Dask based Flood Mapping#

Map floods with Sentinel-1 radar images. This package replicates the work of Bauer-Marschallinger et al. (2022)[1] on the TU Wien Bayesian flood mapping algorithm. The implementation is built entirely on dask, with data access via STAC using odc-stac. The algorithm requires three pre-processed input datasets, which are stored and accessible via STAC at the Earth Observation Data Centre For Water Resources Monitoring (EODC). Future implementations are expected to support data from other STAC catalogues as well.

This notebook explains how microwave backscattering can be used to map the extent of a flood. The workflow detailed in this notebook forms the backbone of this package. For a short overview of the Bayesian decision method for flood mapping, see this ProjectPythia book.
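To give a rough feel for what a Bayesian decision between "flood" and "no flood" looks like, here is a minimal, generic sketch for a single backscatter value. It is not the package's implementation: the Gaussian class models, the function names, the equal priors, and all numbers are assumptions chosen purely for illustration. The only physical fact it leans on is that open water typically returns much less radar backscatter than land.

```python
import math


def gaussian_pdf(x, mean, std):
    """Probability density of a normal distribution evaluated at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def flood_posterior(sigma0_db, water, land, prior_flood=0.5):
    """Posterior probability of flood for one backscatter value in dB.

    `water` and `land` are (mean, std) tuples describing the assumed
    Gaussian backscatter distributions of the two classes.
    """
    p_water = gaussian_pdf(sigma0_db, *water) * prior_flood
    p_land = gaussian_pdf(sigma0_db, *land) * (1.0 - prior_flood)
    return p_water / (p_water + p_land)


# Hypothetical class statistics in dB, made up for this illustration only.
WATER = (-20.0, 2.0)
LAND = (-10.0, 2.0)

for sigma0 in (-19.0, -15.0, -11.0):
    posterior = flood_posterior(sigma0, WATER, LAND)
    # Decide for the class with the higher posterior probability.
    label = "flood" if posterior > 0.5 else "no flood"
    print(f"{sigma0:6.1f} dB -> P(flood) = {posterior:.3f} ({label})")
```

In this toy setup, a pixel is labelled as flooded when the water class explains the observed backscatter better than the land class. The package applies the decision to whole Sentinel-1 scenes rather than to single values.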

Installation#

To install the package, do the following:

pip install dask-flood-mapper

Usage#

Storm Babet hit Denmark and the northern coast of Germany on 20 October 2023 (Wikipedia). Here, an area around Zingst on the Baltic coast of northern Germany is selected as the study area.

Local Processing#

Define the time range and geographic region in which the event occurred.

time_range = "2023-10-11/2023-10-25"

bbox = [12.3, 54.3, 13.1, 54.6]
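Because a swapped coordinate or a reversed interval only shows up later as an empty search result, it can help to sanity-check both inputs first. The sketch below assumes the usual STAC conventions: a bounding box ordered as `[min_lon, min_lat, max_lon, max_lat]` in degrees, and a time range written as `start/end` with ISO dates. The helper names `parse_time_range` and `check_bbox` are hypothetical and not part of dask-flood-mapper, and the interval in the demo is an arbitrary example.

```python
from datetime import date


def parse_time_range(time_range):
    """Split a 'start/end' string of ISO dates into two date objects."""
    start_text, end_text = time_range.split("/")
    start = date.fromisoformat(start_text)
    end = date.fromisoformat(end_text)
    if start > end:
        raise ValueError(f"start {start} is after end {end}")
    return start, end


def check_bbox(bbox):
    """Validate a box assumed to be [min_lon, min_lat, max_lon, max_lat]."""
    min_lon, min_lat, max_lon, max_lat = bbox
    if not (-180 <= min_lon < max_lon <= 180):
        raise ValueError("need -180 <= min_lon < max_lon <= 180")
    if not (-90 <= min_lat < max_lat <= 90):
        raise ValueError("need -90 <= min_lat < max_lat <= 90")
    return tuple(bbox)


# Arbitrary example interval; the bounding box is the Zingst study area.
start, end = parse_time_range("2024-01-01/2024-01-15")
print("days covered:", (end - start).days)
print("bbox ok:", check_bbox([12.3, 54.3, 13.1, 54.6]))
```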

Use the flood module to calculate the flood extent with the Bayesian decision method applied to Sentinel-1 radar images. The returned object is an xarray object backed by lazily loaded Dask arrays. To load the data into memory, call the compute method on the returned object.

from dask_flood_mapper import flood

flood.decision(bbox=bbox, datetime=time_range).compute()

Distributed Processing#

The data can also be processed remotely on the EODC Dask Gateway. This has the added benefit that processing happens close to the data source, without file transfers over the internet becoming the rate-limiting step.

To simplify working with the Dask Gateway, install the eodc package alongside the dask-gateway package. See also the EODC documentation.

pip install dask-gateway eodc

# or use pipenv

# git clone https://github.com/interTwin-eu/dask-flood-mapper.git

# cd dask-flood-mapper

# pipenv sync -d

However, version differences between the client and the server can cause problems. The most convenient way to use the EODC Dask Gateway successfully is therefore Docker. To do this, clone the GitHub repository and use the docker-compose.yml.

git clone https://github.com/interTwin-eu/dask-flood-mapper.git

cd dask-flood-mapper

docker compose up

Copy and paste the generated URL into your browser to launch Jupyter Lab. There you can run the code snippets below or execute the notebook about remote processing.

from eodc.dask import EODCDaskGateway

from eodc import settings

from rich.prompt import Prompt

settings.DASK_URL = "http://dask.services.eodc.eu"

settings.DASK_URL_TCP = "tcp://dask.services.eodc.eu:10000/"

Connect to the gateway (this requires an EODC account).

your_username = Prompt.ask(prompt="Enter your Username")

gateway = EODCDaskGateway(username=your_username)

Create a cluster.

[!CAUTION] By default no worker is spawned, so use the widget to add or scale Dask workers before running computations on the cluster.

cluster_options = gateway.cluster_options()

cluster_options.image = "ghcr.io/eodcgmbh/cluster_image:2025.4.1"

cluster = gateway.new_cluster(cluster_options)

client = cluster.get_client()

cluster
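As an alternative to the widget, workers can usually be requested from code. The sketch below assumes that the object returned by `gateway.new_cluster` behaves like a dask-gateway `GatewayCluster`, which provides a `scale(n)` method. The helper `start_workers` is a hypothetical convenience wrapper, not part of the eodc or dask-flood-mapper packages.

```python
def start_workers(cluster, n_workers=2):
    """Request a fixed number of Dask workers on a gateway cluster.

    `cluster` is assumed to expose `scale(n)`, as dask-gateway's
    GatewayCluster does.
    """
    if n_workers < 1:
        raise ValueError("at least one worker is needed to run computations")
    cluster.scale(n_workers)
    return n_workers


# Usage with the cluster created above (requires a live gateway connection):
# start_workers(cluster, n_workers=2)
```

When the work is done, releasing the resources with `client.close()` and `cluster.shutdown()` avoids leaving idle workers running on the gateway.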

Map the flood the same way as we have done when processing locally.

flood.decision(bbox=bbox, datetime=time_range).compute()

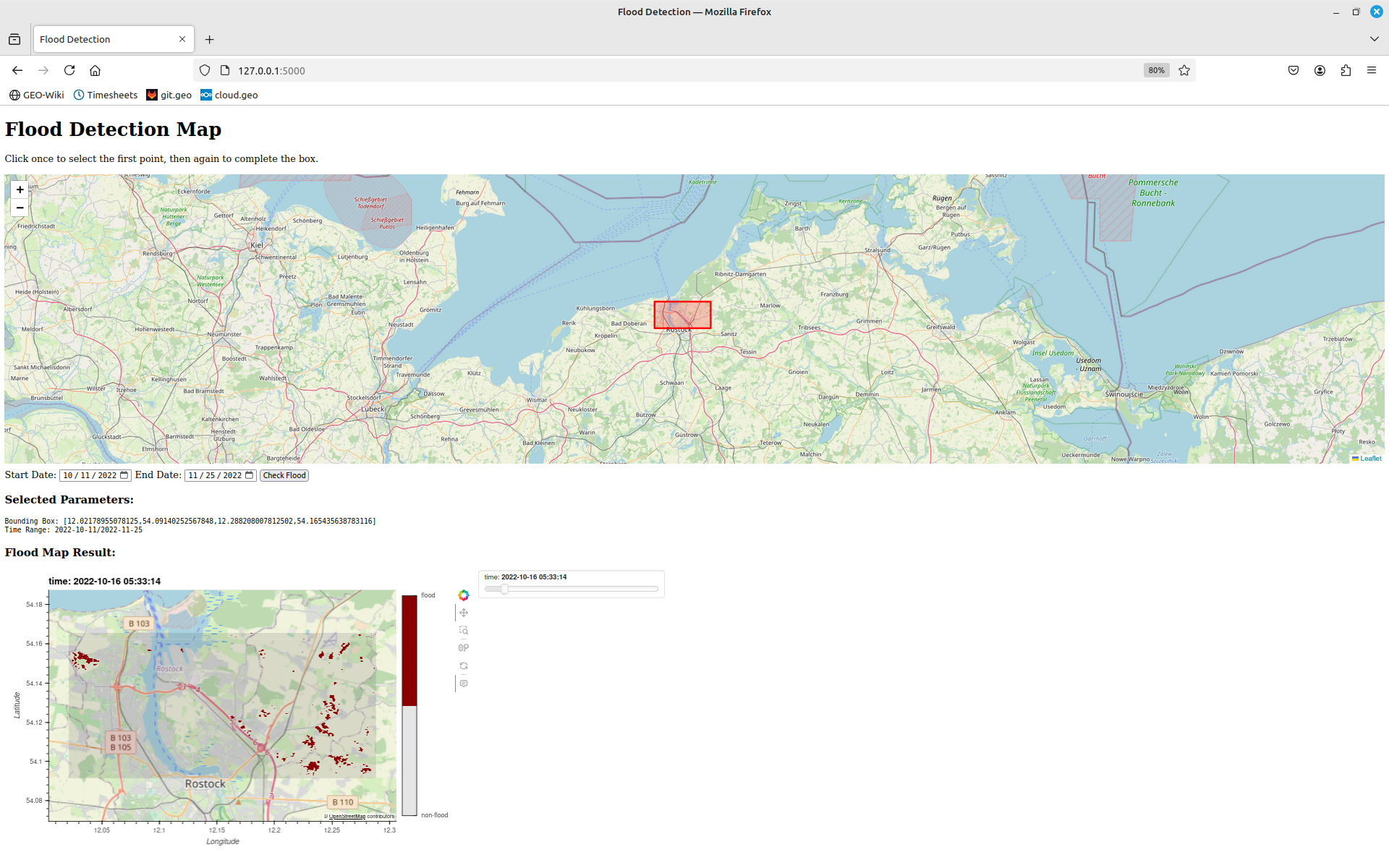

User Interface#

The workflow can also be run in a user-friendly graphical interface.

First, install the extra packages with:

pip install "dask-flood-mapper[app]"

Then, to launch it, simply run the following command in a terminal:

floodmap

It will open the GUI in the web browser.

Contributing Guidelines#

The contributing guidelines are in the file CONTRIBUTING.md.

Automated Delivery#

This repository provides a container image for running Dask based flood mapping on the EODC Dask Gateway. Use the URL ghcr.io/intertwin-eu/dask-flood-mapper:latest to specify the image.

docker pull ghcr.io/intertwin-eu/dask-flood-mapper:latest

Credits#

Credits go to EODC (https://eodc.eu) for developing the infrastructure and the management of the data required for this workflow. This work has been supported as part of the interTwin project (https://www.intertwin.eu). The interTwin project is funded by the European Union Horizon Europe Programme - Grant Agreement number 101058386.

Views and opinions expressed are however those of the authors only and do not necessarily reflect those of the European Union Horizon Europe/Horizon 2020 Programmes. Neither the European Union nor the granting authorities can be held responsible for them.

License#

This repository is covered under the MIT License.