Deploy interLink virtual nodes

Learn how to deploy interLink virtual nodes on your cluster. In this tutorial you are going to set up all the components needed to either develop or deploy a plugin for container management on a remote host via a local Kubernetes cluster.

The installer that we are going to configure provides a complete Kubernetes manifest to instantiate the virtual node interface. It also generates an installation bash script to be executed on the remote host to which you want to delegate your container execution. That script is already configured to authenticate incoming requests from the virtual node component automatically and to forward the correct instructions to the OpenAPI interface of the interLink plugin (a.k.a. sidecar) of your choice. You can therefore also use this setup to develop a plugin directly, without caring about anything else.

For a complete guide on all the possible scenarios, please refer to the Cookbook.

Requirements

- kubectl host: a host with MiniKube installed and running

- A GitHub account

- remote host: A "remote" machine with a port that is reachable by the MiniKube host
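Once something is listening on the remote host, it can help to confirm from the kubectl host that the port is really reachable. The following is a minimal sketch, assuming a netcat (`nc`) that supports `-z` and `-w` is installed; `port_reachable` and `REMOTE_IP` are hypothetical names introduced here, and 30443 is the port used in this tutorial's example configuration.

```shell
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Assumption: the local netcat supports -z (connect only) and -w (timeout).
# $1: host name or IP address, $2: port
port_reachable() {
  nc -z -w 3 "$1" "$2"
}

# Example (REMOTE_IP is a placeholder for your remote host address):
#   port_reachable "$REMOTE_IP" 30443 && echo "port is reachable"
```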

Create an OAuth GitHub app

In this tutorial GitHub tokens are just one example of an authentication mechanism. Any OpenID-compliant identity provider is also supported with the very same deployment script; see examples here.

As a first step, you need to create a GitHub OAuth application that interLink will use to authenticate the communication between your Kubernetes cluster and the remote endpoint.

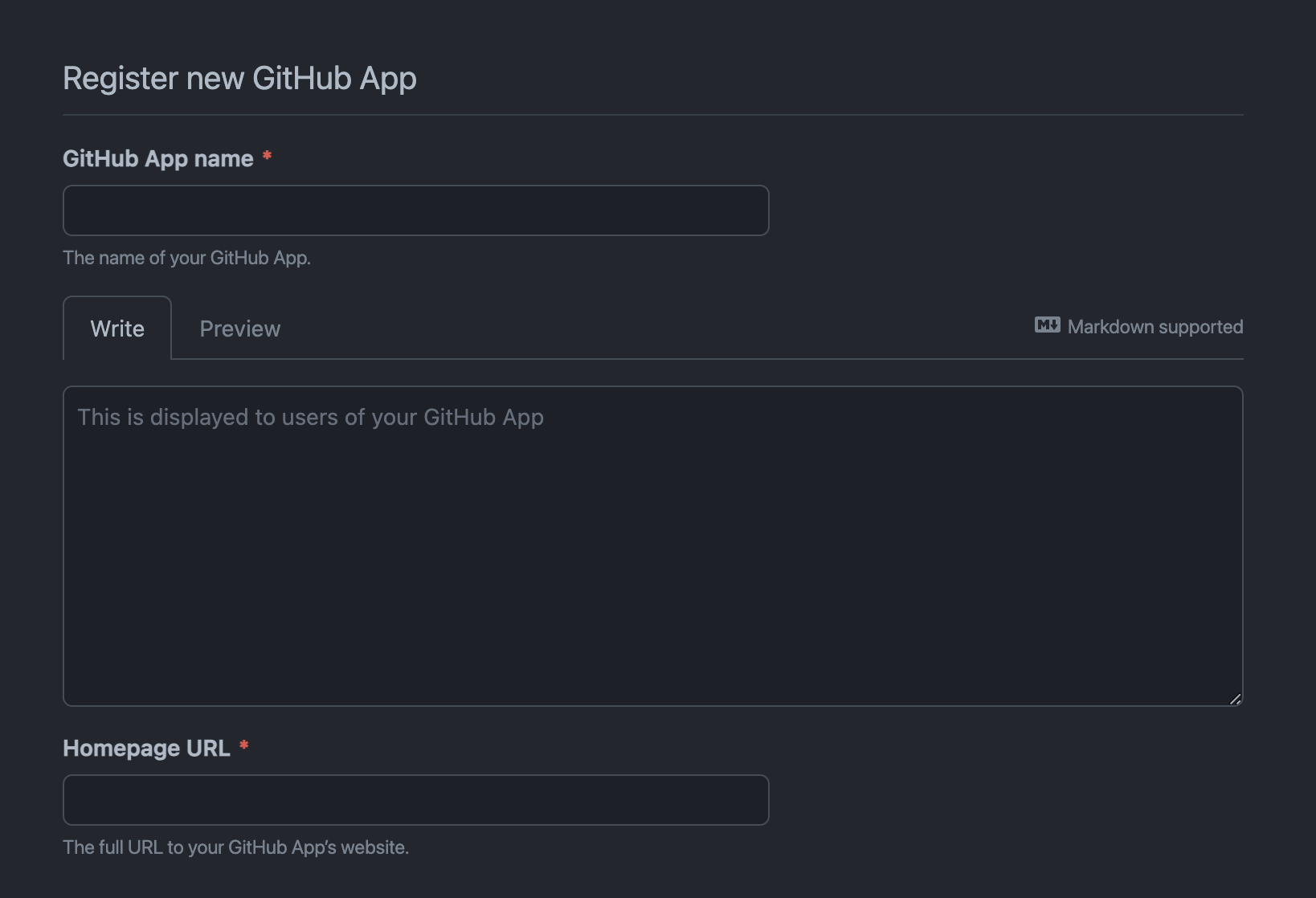

Head to https://github.com/settings/apps and click on New GitHub App. You should now be looking at a form like this:

Provide a name for the OAuth2 application, e.g. interlink-demo-test, and you can skip the description, unless you want to provide one for future reference.

For our purposes the Homepage URL is not used either, so feel free to put https://intertwin-eu.github.io/interLink/ there.

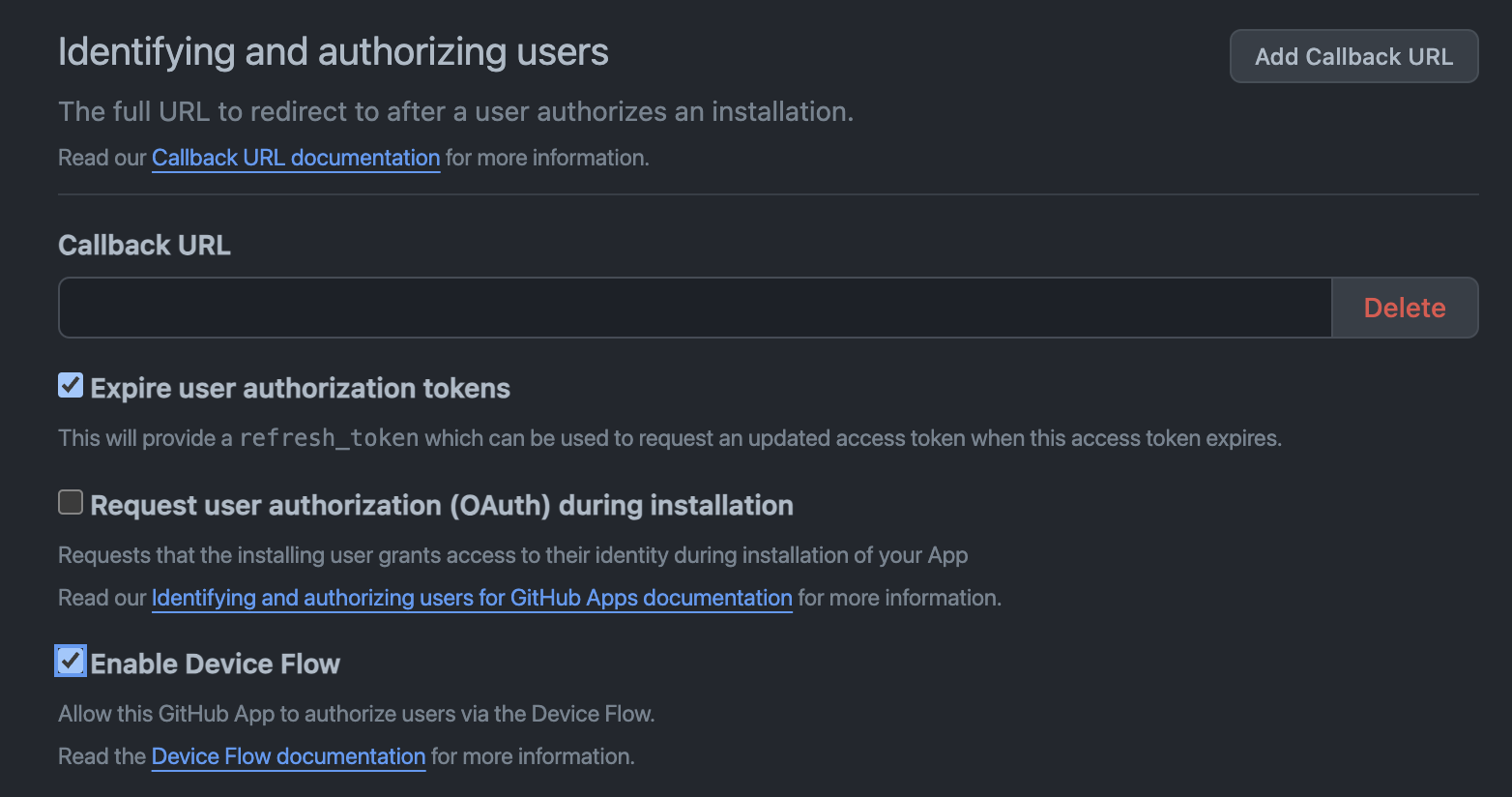

Check now that refresh token and device flow authentication:

Disable webhooks and save by clicking on Create GitHub App.

You can then click on your application, which should now appear at https://github.com/settings/apps. Save two strings: the Client ID and, after clicking on Generate a new client secret, the corresponding Client Secret.

Now it's all set for the next steps.
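For orientation, the device flow enabled above boils down to two HTTP calls: one to obtain a user code, and one to poll for the token after the user has entered that code in the browser. The sketch below illustrates this with `curl` against GitHub's device code and access token endpoints; it is not the installer's actual code, and the function names are hypothetical.

```shell
# Step 1: ask GitHub for a device code and a user code.
# $1: Client ID of the GitHub App, $2: requested scope (e.g. read:user)
request_device_code() {
  curl -s -X POST \
    -H "Accept: application/json" \
    -d "client_id=$1" \
    -d "scope=$2" \
    https://github.com/login/device/code
}

# Step 2: after the user has entered the code at
# https://github.com/login/device, poll for the access token.
# $1: Client ID, $2: device_code returned by step 1
poll_access_token() {
  curl -s -X POST \
    -H "Accept: application/json" \
    -d "client_id=$1" \
    -d "device_code=$2" \
    -d "grant_type=urn:ietf:params:oauth:grant-type:device_code" \
    https://github.com/login/oauth/access_token
}
```

The JSON returned by the first call carries, among others, the `device_code`, the `user_code` to type in the browser, and the polling `interval` to respect between calls to the second function.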

Configuring your virtual kubelet setup (remote host)

Log in to the machine and download the interLink installer CLI for your OS and processor architecture from the release page, looking for the binaries starting with interlink-installer. For instance, on a Linux platform with an x86_64 processor:

export VERSION=$(curl -s https://api.github.com/repos/intertwin-eu/interlink/releases/latest | jq -r .name)

wget -O interlink-installer https://github.com/interTwin-eu/interLink/releases/download/$VERSION/interlink-installer_Linux_x86_64

chmod +x interlink-installer
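On other platforms the asset name has to be adapted. The sketch below derives it from `uname`, under the assumption (not confirmed here) that every release asset follows the same `interlink-installer_<OS>_<arch>` pattern as the Linux x86_64 example; always check the release page for the exact file names. `installer_asset` and `installer_url` are hypothetical helper names.

```shell
# Build the installer asset name for the current machine.
# Assumption: assets are named interlink-installer_<uname -s>_<uname -m>,
# as in the Linux x86_64 example.
installer_asset() {
  echo "interlink-installer_$(uname -s)_$(uname -m)"
}

# Build the download URL for a given release version.
# $1: release version (e.g. the value of $VERSION)
installer_url() {
  echo "https://github.com/interTwin-eu/interLink/releases/download/$1/$(installer_asset)"
}

# Example:
#   wget -O interlink-installer "$(installer_url "$VERSION")"
```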

The CLI offers a utility option to create an empty config file for the installation at $HOME/.interlink.yaml:

./interlink-installer --init

You are now ready to go ahead and edit the produced file with all the setup information.

Let's take the following as an example of a valid configuration file.

Note that the interlink_version below is only an example: see the release page to get the latest one and change the value accordingly. If you followed the steps above, echo $VERSION should be enough to get the correct value.

interlink_ip: x.x.x.x
interlink_port: 30443
interlink_version: 0.3.3
kubelet_node_name: my-node
kubernetes_namespace: interlink
node_limits:
  cpu: "10"
  memory: 256
  pods: "10"
oauth:
  provider: github
  issuer: https://github.com/oauth
  grant_type: authorization_code
  scopes:
    - "read:user"
  github_user: "dciangot"
  token_url: "https://github.com/login/oauth/access_token"
  device_code_url: "https://github.com/login/device/code"
  client_id: "XXXXXXX"
  client_secret: "XXXXXXXX"
insecure_http: true

This config file has the following meaning:

- the remote components (where the pods will be "offloaded") will listen on the IP address x.x.x.x on the port 30443

- deploy all the components from interLink release 0.3.3 (see release page to get the latest one)

- the virtual node will appear in the cluster under the name my-node

- the in-cluster components will run under the interlink namespace

- the virtual node will show the following static resource availability:

  - 10 cores

  - 256GiB RAM

  - a maximum of 10 pods

- the cluster-to-interlink communication will be authenticated via the github provider, with a token with minimum capabilities (scope read:user only), and only the tokens for user dciangot will be allowed to talk to the interlink APIs

- token_url and device_code_url should be left like that if you use GitHub

- client_id and client_secret are the values noted down at the beginning of the tutorial
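Before running the installer, a quick sanity check of the edited file can catch a key that was deleted by accident. This is a minimal sketch that only greps for the top-level keys used in the example configuration; it is not a YAML parser, and `check_config` is a hypothetical helper, not part of the interLink CLI.

```shell
# Report top-level keys from the example configuration that are missing.
# Returns 0 when all of them are present, 1 otherwise.
# $1: path to the config file (e.g. $HOME/.interlink.yaml)
check_config() {
  missing=0
  for key in interlink_ip interlink_port interlink_version \
             kubelet_node_name kubernetes_namespace node_limits oauth; do
    if ! grep -q "^${key}:" "$1"; then
      echo "missing key: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   check_config "$HOME/.interlink.yaml" && echo "config looks complete"
```

Since the file contains the client secret, restricting its permissions with `chmod 600 $HOME/.interlink.yaml` is a sensible precaution.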

You are now ready to generate the manifests and the script needed for the deployment.

Deploy the interLink core components (remote host)

Log in to the machine and generate the manifests and the automatic interLink installation script with:

./interlink-installer

Follow the instructions to authenticate with the device code flow and, if everything went well, you should get an output like the following:

please enter code XXXX-XXXX at https://github.com/login/device

=== Deployment file written at: /Users/dciangot/.interlink/interlink.yaml ===

To deploy the virtual kubelet run:

kubectl apply -f /Users/dciangot/.interlink/interlink.yaml

=== Installation script for remote interLink APIs stored at: /Users/dciangot/.interlink/interlink-remote.sh ===

Please execute the script on the remote server: 192.168.1.127

"./interlink-remote.sh install" followed by "interlink-remote.sh start"

Start installing all the needed binaries and configurations:

chmod +x ./.interlink/interlink-remote.sh

./.interlink/interlink-remote.sh install

By default the script will generate self-signed certificates for your IP address. If you want to use your own, you can place them in ~/.interlink/config/tls.{crt,key}.
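Placing your own certificate pair can be sketched as a small helper. `install_tls` is a hypothetical function, not part of interlink-remote.sh; it only copies the two files to the names mentioned above and tightens the permissions on the private key.

```shell
# Copy a certificate/key pair to the location mentioned above.
# $1: certificate file, $2: private key file,
# $3: config directory (optional, defaults to ~/.interlink/config)
install_tls() {
  dest="${3:-$HOME/.interlink/config}"
  mkdir -p "$dest" &&
    cp "$1" "$dest/tls.crt" &&
    cp "$2" "$dest/tls.key" &&
    chmod 600 "$dest/tls.key"   # keep the private key readable by the owner only
}

# Example (file names are placeholders):
#   install_tls ./my-cert.pem ./my-key.pem
```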

Now it's time to start the components (namely oauth2_proxy and the interLink API server):

./interlink-remote.sh start

Check that no errors appear in the logs located in ~/.interlink/logs. You should also start seeing ping requests coming in from your Kubernetes cluster.
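A quick way to look for problems is to grep the log directory. The sketch below assumes that problematic lines contain one of the words "error", "fatal" or "panic", which may not match the exact log format of the components; `scan_logs` is a hypothetical helper.

```shell
# Print log lines that mention an error, prefixed by the file name.
# Returns non-zero when nothing matches.
# Assumption: failures are logged with the words error, fatal or panic.
# $1: log directory (optional, defaults to ~/.interlink/logs)
scan_logs() {
  grep -riE 'error|fatal|panic' "${1:-$HOME/.interlink/logs}"
}

# Example:
#   scan_logs || echo "no errors found"
```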

To stop or restart the components you can use the dedicated commands:

./interlink-remote.sh stop

./interlink-remote.sh restart

N.B. you can find the oauth2_proxy configuration parameters by looking into the interlink-remote.sh script.

N.B. logs (especially in verbose mode) can become pretty large, so consider implementing your favorite rotation routine for all the logs in ~/.interlink/logs/
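As one possible rotation routine, the sketch below copies a log file aside and truncates it in place once it exceeds a size limit, so the running process can keep writing to the same file. `rotate_log` is a hypothetical helper; lines written between the copy and the truncation can be lost, so a dedicated tool such as logrotate may be preferable for production use.

```shell
# Rotate one log file in place when it exceeds a size limit.
# $1: log file, $2: size limit in bytes, $3: number of old copies to keep (>= 1)
rotate_log() {
  file="$1"; max="$2"; keep="$3"
  [ -f "$file" ] || return 0
  size=$(( $(wc -c < "$file") ))
  [ "$size" -gt "$max" ] || return 0
  # Shift older copies: file.1 -> file.2, and so on up to file.$keep
  i="$keep"
  while [ "$i" -gt 1 ]; do
    prev=$((i - 1))
    [ -f "$file.$prev" ] && mv "$file.$prev" "$file.$i"
    i="$prev"
  done
  cp "$file" "$file.1"   # copy first, then truncate the live file
  : > "$file"
}

# Example, e.g. from a cron job: rotate every log above 50 MB, keep 3 copies
#   for f in "$HOME"/.interlink/logs/*.log; do rotate_log "$f" 52428800 3; done
```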

Attach your favorite plugin or develop one! (remote host)

The next chapter shows the basics of developing a new plugin following the interLink OpenAPI spec.

Alternatively, you can start an already supported one.

Remote SLURM job submission

Note that the SLURM plugin repository is: github.com/interTwin-eu/interlink-slurm-plugin

Requirements

- a slurm CLI available on the remote host and configured to interact with the computing cluster

- a shared filesystem (sharedFS) visible to all the worker nodes

  - an experimental feature is available for cases in which this is not possible

Configuration

- Create utility folders:

mkdir -p $HOME/.interlink/logs
mkdir -p $HOME/.interlink/bin
mkdir -p $HOME/.interlink/config

- Create a configuration file (remember to substitute /home/myusername/ with your actual home path):

./interlink/manifests/plugin-config.yaml

Socket: "unix:///home/myusername/plugin.sock"
InterlinkPort: "0"
SidecarPort: "0"
CommandPrefix: ""
DataRootFolder: "/home/myusername/.interlink/jobs/"
BashPath: /bin/bash
VerboseLogging: false
ErrorsOnlyLogging: false
SbatchPath: "/usr/bin/sbatch"
ScancelPath: "/usr/bin/scancel"
SqueuePath: "/usr/bin/squeue"
SingularityPrefix: ""

- More on configuration options at official repo

You are almost there! Now it's time to add this virtual node into the Kubernetes cluster!

Before going ahead, put the correct DataRootFolder in the example above! Don't forget the / at the end!
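The trailing slash is easy to forget, so it can be checked mechanically. This is a minimal sketch that reads the DataRootFolder line from the plugin configuration with `sed`, expecting the double-quoted form used in the example; `check_data_root` is a hypothetical helper.

```shell
# Succeed only if DataRootFolder in the given plugin config ends with "/".
# Plain-text check that expects the quoted form:
#   DataRootFolder: "/some/path/"
# $1: path to the plugin configuration file
check_data_root() {
  value=$(sed -n 's/^DataRootFolder:[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p' "$1")
  case "$value" in
    */) return 0 ;;
    *)  echo "DataRootFolder must end with /: '$value'"; return 1 ;;
  esac
}

# Example:
#   check_data_root ./interlink/manifests/plugin-config.yaml
```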

Systemd installation

To get the latest version of the plugin, please visit the release page.

Download the latest release binary to $HOME/.interlink/bin/plugin and make it executable:

export PLUGIN_VERSION=$(curl -s https://api.github.com/repos/intertwin-eu/interlink-slurm-plugin/releases/latest | jq -r .name)

wget -O $HOME/.interlink/bin/plugin https://github.com/interTwin-eu/interlink-slurm-plugin/releases/download/${PLUGIN_VERSION}/interlink-sidecar-slurm_Linux_x86_64

chmod +x $HOME/.interlink/bin/plugin

Now you can create a systemd user service with the following:

mkdir -p $HOME/.config/systemd/user

cat <<EOF > $HOME/.config/systemd/user/slurm-plugin.service
[Unit]
Description=This Unit is needed to automatically start the SLURM plugin at system startup
After=network.target

[Service]
Type=simple
ExecStart=$HOME/.interlink/bin/plugin
Environment="SLURMCONFIGPATH=$HOME/.interlink/config/slurm.yaml"
Environment="SHARED_FS=true"
StandardOutput=file:$HOME/.interlink/logs/plugin.log
StandardError=file:$HOME/.interlink/logs/plugin.log

[Install]
WantedBy=default.target
EOF

systemctl --user daemon-reload

systemctl --user enable slurm-plugin.service

Finally, start the service and check its status:

systemctl --user start slurm-plugin.service

systemctl --user status slurm-plugin.service

Logs will be stored at $HOME/.interlink/logs/plugin.log.

Create UNICORE jobs to run on HPC centers

UNICORE (Uniform Interface to Computing Resources) offers a ready-to-run system including client and server software. UNICORE makes distributed computing and data resources available in a seamless and secure way in intranets and the internet.

Remote docker execution

A maintained plugin will come soon... In the meantime you can take a look at the "developing a plugin" example.

Submit pods to HTCondor or ARC batch systems

Coming soon

Remote Kubernetes Plugin

This interLink plugin extends the capabilities of existing Kubernetes clusters, enabling them to offload workloads to another remote cluster. It supports offloading pods that expose HTTP endpoints (i.e., HTTP microservices).

Deploy the interLink Kubernetes Agent (kubectl host)

You can now install the helm chart with the preconfigured (by the installer script) helm values in ./interlink/manifests/values.yaml

helm upgrade --install \
  --create-namespace \
  -n interlink \
  my-node \
  oci://ghcr.io/intertwin-eu/interlink-helm-chart/interlink \
  --values ./interlink/manifests/values.yaml

You can fix the version of the chart by using the --version option.

Check that the node becomes READY after some time, or as soon as you see the pods in namespace interlink running.
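This check can be scripted with standard kubectl commands. The sketch below wraps them in two hypothetical helpers; `my-node` and `interlink` are the node name and namespace from the example configuration and should be replaced with your own values.

```shell
# Block until the virtual node reports Ready, or fail after the timeout.
# $1: node name, $2: timeout (optional, defaults to 300s)
wait_for_node() {
  kubectl wait --for=condition=Ready "node/$1" --timeout="${2:-300s}"
}

# List the in-cluster interLink pods.
# $1: namespace (optional, defaults to interlink)
show_interlink_pods() {
  kubectl get pods -n "${1:-interlink}"
}

# Example:
#   show_interlink_pods && wait_for_node my-node
```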

You are all set up, congratulations! If you run into problems, we suggest starting your debugging from the logs of the pod containers.

Test your setup

Please find a demo pod to test your setup here.